Žurnalistikos tyrimai ISSN 2029-1132 eISSN 2424-6042

2022, 16, pp. 39–71 DOI: https://doi.org/10.15388/ZT/JR.2022.2

Artificial Intelligence and Fake News

Fadia Hussein (Corresponding Author)

Researcher, Arab Association for Research & Communication Sciences, Beirut, Lebanon

Email: fadiahussein3@gmail.com

Hussin J. Hejase

Consultant to the President, Professor of Business Administration, Al Maaref University, Beirut, Lebanon

Abstract. Artificial intelligence (AI) relies on the performance of digital devices to carry out tasks that normally require human intelligence, using specialized software to accomplish work more easily and quickly, handle data-intensive tasks, and provide useful analytics or solutions. It also requires a specialized laboratory that provides high-performance computing capabilities and a technical platform for deep machine learning. These resources enable an AI platform to master machine learning techniques for using, developing, simulating, and predicting models, and for building ready-to-use technological solutions such as analytics platforms.

In general, an AI system manipulates and manages large amounts of training data to form correlations and patterns that are used to build future predictions. A limited-memory AI system can store a limited amount of information based on previously processed data, and it combines this memory with pre-programmed data to build knowledge. Consequently, one may ask how AI applications contribute to verifying the truthfulness of media content on digital platforms, and how they help prevent the spread of misleading and false news.

This study tries to answer the following question: What methods and tools are adopted by artificial intelligence to detect fake news, especially on social media platforms and depending on artificial intelligence laboratories?

This paper is framed within automation control theory, which guides the definition of the control tools and programs needed to detect fake news and verify media facts.

Keywords: Artificial intelligence, fake news, machine learning, techniques, data.

Received: 2022/12/15. Accepted: 2023/01/30

Copyright © 2022 Fadia Hussein, Hussin J. Hejase. Published by Vilnius University Press. This is an Open Access article distributed under the terms of the Creative Commons Attribution Licence (CC BY), which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Introduction

Hawking (2016) posits that “The rise of powerful Artificial Intelligence (AI) will be either the best or the worst thing ever to happen to humanity. We do not yet know which.” In a world filled with contradictions, information and communications technology (ICT) in its most advanced versions may help answer Hawking’s open question in practice. The current research sheds light on the dark side of media, specifically on the generation and spreading of fake news. AI can both generate and detect fake news. According to Shao (2020), “Peter Singer, cybersecurity and defense-focused strategist and senior fellow at New America, defines ‘deepfake’ as the technology used to make people believe something is real when it is not.” The term ‘deepfake’, itself a form of AI, combines the words ‘deep learning’ and ‘fake’.

Deepfake technology has advanced and can now create convincing images; according to Evon (2022), the best way to spot them is to look for the source of the video. Yet although AI has been accused of spreading misinformation (Roszell, 2021), it also presents itself as an effective tool for ensuring data veracity and identifying fake news (Ariwala, 2022). Moreover, AI has gained importance in handling misinformation as the volume of data continues to grow significantly. It “challenges the human ability to uncover the truth and makes it easy to learn behaviors, possibly through pattern recognition, harnessing artificial intelligence’s power” (ibid).

The simple meaning of fake news is “to incorporate information that leads people to the wrong path. Nowadays, fake news is spreading like water and people share this information without verifying it. This is often done to further or impose certain ideas and is often achieved with political agendas” (Bhikadiya, 2020, para 3). The amount of data exchanged on the internet and more specifically on social media networks is growing exponentially, threatening the credibility of these social networks (Lahby, Pathan, Maleh, et al., 2022). For example, social media sites like Facebook, Twitter, and others have all been accused of spreading fake news (Roszell, 2021).

Regarding fake images and videos, according to a recent study by Pew Research Center (2019), “most adults think that altered videos and images create a great deal of confusion about the facts of current events” (Gottfried, 2019, para 2). Facebook presents itself as a pioneer in handling fake news across trillions of user posts. “The act of sharing also lends credibility to a post, when other people see it, they register that it was shared by someone they know and presumably trust to some degree, and are less likely to notice whether the source is questionable” (The Conversation, 2018). Hence, when one sees an enraging post, one should investigate the content rather than share it immediately. However, Facebook realized that AI outperforms manual fact-checking, which on its own cannot solve the problem of fake news at this scale.

AI is being leveraged to “find words or even patterns of words that can throw light on fake news stories” (Singh, 2017, para 6). Besides, “several solutions and algorithms using machine learning (ML) have been proposed to detect false news generated by different digital media platforms” (Singh, 2017, para 7).

The Conversation site (2018) reported that “Social media sites like YouTube and Facebook could voluntarily decide to label their content, showing clearly whether an item purporting to be news is verified by a reputable source” (para 13). It also reported that “Zuckerberg told Congress he wants to mobilize Facebook users’ community to direct his company’s algorithms. Facebook could crowd-source verification efforts. Wikipedia also offers a model of dedicated volunteers who track and verify information” (The Conversation, 2018, para 13).

In fact, “Facebook has been working with four independent fact-checking organizations—Snopes, Politifact, ABC News, and FactCheck.org—to verify the truthfulness of viral stories” (Marr, 2018, para 8). In addition, “Facebook recently announced its plan to open two new AI Labs that will work on creating an AI safety net for its users, tackling fake news, political propaganda as well as bullying on its platform” (ibid, para 8).

Research questions

This study aims to answer one main research question and a set of sub-questions, as follows:

Main research question:

What methods and tools are adopted by AI to detect fake news, especially on social media platforms, depending on artificial intelligence laboratories?

Research sub-questions

1. On what does AI rely to carry out tasks?

2. How does AI build knowledge?

3. Does AI provide ready-to-use technology solutions?

Theoretical Foundation

Automation advancements are the result of the continuous development of several fields like mechanics and fluidics, civil infrastructure, machine design, and especially the development of computers and information and communications technology (ICT) since the 20th century.

In its general sense, automation implies “operating or acting or self-regulating, independently, without human intervention” (Nof, 2009, p. 14). In the early years of the 20th century, automation was associated with the replacement of human workers by technology (Hejase, 1999a). Human involvement with automation has been carefully studied, given that such a human-machine association extends beyond machines to include tools, devices, installations, and systems that are all platforms developed to perform given tasks (Hejase, 1999b). Accordingly, “in its modern meaning automation could be viewed as a substitution by mechanical, hydraulic, pneumatic, electric, and electronic devices for a combination of human efforts and decisions” (Nof, 2009, p. 14).

“Human intelligence implemented on machines, mainly through computers and communication, became an important part of automation. As human-computer interaction impacts the sophistication of automatic control and its effectiveness by its progress, enabling the development of systems, platforms, and interfaces that support humans in their roles as learners, workers, and researchers in computer environments” (Ibid, pp. 22, 27, and 1555).

Barfield (2021) posits that “control theory may be used to shed light on the relationship between system components and can help to explain how feedback between system components serves to control a system” (p. 564). Barfield proposes that “factors that control a system should be of interest not only to engineers and computer scientists tasked with designing AI systems but also to other fields’ scholars with an interest in how AI should be managed” (p. 564). Automation control theory emphasizes reducing human intervention; it aims to control machines and processes by using control systems in concert with other information technology applications that help collect, verify, and provide useful analytics. This research uses automation control theory to define the control tools and programs needed to detect fake news and verify media facts, treating them as solutions to large-database and analytical problems.

Methodology

This paper relies on a qualitative, descriptive approach. It examines the techniques adopted by the artificial intelligence tools used to detect fake news, and asks how these techniques and tools have been applied to help prevent the spread of misleading and fake news, images, and videos.

This study relies on secondary data to fulfil its purpose (Hejase and Hejase, 2013, p. 114), drawing on journal articles, books, reports, and technical websites to provide the data needed to answer the research questions at hand.

Discussion

Is an automated tool the best way to combat fake news?

This study examines three (3) tools, powered by Artificial Intelligence (AI) and Machine Learning (ML) algorithms, that have proved effective in debunking false news items (Snopes, Pheme, and Botometer), as well as three (3) tools used to verify fake images and videos (Google Reverse Image Search, TinEye, and InVID Verification Plugin).

Artificial Intelligence

McCarthy (2022) defines artificial intelligence as “The science and engineering of making intelligent machines, especially intelligent computer programs. It is related to the similar task of using computers to understand human intelligence, but AI does not have to confine itself to biologically observable methods” (para 1). Kaplan and Haenlein (2019) define it as a system with “the ability to interpret external data correctly, to learn from such data, and to use those learnings to achieve specific goals and tasks through flexible adaptation” (p. 339). Moreover, Russell and Norvig (2009), cited in Tai (2020), posit that “The term AI describes these functions of human-made tool that emulates the “cognitive” abilities of the natural intelligence of human minds” (p. 339). Building on this, Roszell (2021) contends that “by producing indistinguishable reports from what humans would create, AI can also make realistic-looking graphs and charts, creating any scenario it wants, which could be detrimental to society in many ways” (para 3-4). For example, computers can analyze and recognize an individual’s “voice and facial expressions, then produce a convincing video of that person saying anything” (Watson, 2018). “AI could even fabricate a celebrity death from drugs, encouraging others with addictions to take the same path, and to get more views for their site, affecting negatively society by encouraging others to do unthinkable things out of rage or emotional provocation” (Roszell, 2021, para 9). The many existing examples suggest that “we could already be under the influence of AI manipulation, to the degree wars could be provoked” (ibid, para 11). Consequently, “trying to compete with fake news is a sad concept. It’s challenging enough to compete with all the people online, and when you don’t get those views or reads, it generally makes us, in a way, depressed” (ibid, para 13).
According to Susala (2018), “[p]utting technology in an arms race with itself is the biggest challenge of using AI to detect fake news. AI is used to identify AI-created fakes. For example, techniques for video magnification can detect changes in a human pulse that would establish whether a person in a video is real or computer-generated” (para 10-11). However, some fakes are so sophisticated that they are hard to rebut or dismiss. Detecting them depends on AI’s ability to learn behaviors, possibly through pattern recognition.
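The pulse-detection idea Susala mentions rests on a simple signal-processing step: skin color varies faintly with each heartbeat, so the dominant frequency of a face region’s color over time approximates the heart rate. The sketch below shows only that step, not full video magnification, and it is an illustration rather than any cited tool’s method. It assumes a hypothetical upstream stage has already reduced each video frame to the mean green-channel intensity of the face region, and it searches a 0.7 to 4 Hz band (42 to 240 beats per minute). A missing or implausible pulse would be one weak cue, not proof, that a face is computer-generated.

```python
import numpy as np

def estimate_pulse_bpm(signal, fps, low_hz=0.7, high_hz=4.0):
    """Return the dominant frequency of `signal`, in beats per minute.

    `signal` is assumed to hold one mean green-channel value per video frame
    (produced by a hypothetical face-tracking step not shown here).
    """
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()                                 # drop the constant skin-tone offset
    spectrum = np.abs(np.fft.rfft(x))                # magnitude spectrum
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fps)     # frequency (Hz) of each bin
    band = (freqs >= low_hz) & (freqs <= high_hz)    # plausible human heart rates
    if not band.any():
        raise ValueError("signal too short to resolve the requested band")
    peak_hz = freqs[band][np.argmax(spectrum[band])]
    return 60.0 * peak_hz

# Synthetic example: 10 seconds at 30 fps with a 1.2 Hz (72 bpm) component plus noise.
fps = 30
t = np.arange(300) / fps
rng = np.random.default_rng(0)
signal = 100 + 0.5 * np.sin(2 * np.pi * 1.2 * t) + rng.normal(0, 0.05, t.size)
print(round(estimate_pulse_bpm(signal, fps)))  # 72
```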

Vertical or Horizontal Artificial Intelligence

AI services can be classified as either vertical or horizontal AI. These two approaches “are different yet equally important and complementary ways” (Ada, 2021, para 1). Vertical AI is “usually related to a specific problem in a specific industry. It is trained exclusively on that industry-specific data. Vertical AI companies are focused on mastering a single, large use case like Waymo and Vara” (ibid). Ariwala (2022) agrees that “Vertical AI services focus on the single job, whether scheduling meetings, automating repetitive work, and performing just one job and do it so well, that we might mistake them for a human” (para 8).

On the other hand, horizontal AI “can be applied across different industries and can handle multiple tasks. This type of AI is often inspired by one’s knowledge of how the human brain works while solving an issue. Its goal is to build complex algorithms that can perform diverse tasks from a common core. AI is an automated decision-making system, which continuously learns, adapts, suggests, and takes actions automatically. For instance, Apple’s Siri or Amazon’s Alexa are examples of horizontal AI applications” (Ada, 2021, para 2). Ariwala (2022) provides the following examples for Horizontal AI services: “Cortana, Siri, and Alexa which work for multiple tasks and not just for a particular task entirely” (para 9).

In summary, “AI is an automated decision-making system, which continuously learns, adapts, suggests, and takes actions automatically. At the core, it requires algorithms that can learn from their experience. This is where Machine Learning comes into the picture” (Ariwala, 2022, para 10).

Machine Learning

Machine Learning (ML) is a subset of Artificial Intelligence, “which enables machines to learn from past data or experiences without being explicitly programmed” (Javatpoint, 2021, para 10).

Unlike the traditional approach, ML “enables a computer system to make predictions or take some decisions using historical data without being explicitly programmed. So, by using a massive amount of structured and semi-structured data, the Machine Learning model will generate accurate results or shall make predictions” (Javatpoint, 2021, para 11). As a result, ML is used in many applications, such as “online recommender systems, Google search algorithms, Email spam filters, Facebook Auto friend tagging suggestion, etc...” (Ibid).

ML has three types of learning: “Supervised, unsupervised, and reinforcement” (Bhikadiya, 2020, para 2). Bhikadiya posits that supervised learning “means training a specific model with labeled examples so the machine first learns from those examples and then performs the task on unseen data” (para 3). That is, when “an input is provided as a labeled dataset, the model can learn from it to provide the result of the problem easily” (Arora, 2020, para 3).

On the other hand, “unsupervised learning is capable of detecting latent groups or representations in a feature space referred to as clustering. Such learning partitions data points into groups without having to rely on the label or truth data. This label is a required input to the classification algorithms” (Parlett-Pelleriti, Stevens, Dixon, and Linstead, 2022, p. 2). Moreover, Parlett-Pelleriti et al. assert that “the algorithms themselves do not attempt to ascribe meaning to the clusters; i.e., left to human analysts. Instead, algorithms simply report the most likely groups explained by the data. The meaning of those groups must be determined by domain experts” (p. 2). One example of unsupervised learning is the recommendation engines found on e-commerce sites, or Facebook’s friend request suggestion mechanism. According to Lu, Wu, Mao, et al. (2015), “Recommender systems were first applied in e-commerce to solve the information overload problem caused by Web 2.0 and were quickly expanded to the personalization of e-government, e-business, e-learning, and e-tourism.” Nowadays, “recommender systems are an indispensable feature of Internet websites such as Amazon.com, YouTube, Netflix, Yahoo, Facebook, Last.fm, and Meetup” (Sarker, 2021, p. 162; Zhang, Lu, and Jin, 2021, p. 440).

Finally, Kaelbling, Littman, and Moore (1996) define reinforcement learning as “a type of machine learning algorithm that enables software agents and machines to automatically evaluate the optimal behavior in a particular context or environment to improve its efficiency.” According to Arora (2021), “These types of learning algorithms are applied in Robotics, Gaming, etc.” (para 7).
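Supervised learning as described above (learn from labeled examples, then predict on unseen data) can be illustrated with a minimal multinomial naive Bayes text classifier. This is a sketch, not a method used by any of the cited sources: the four headlines and their ‘fake’/‘real’ labels are invented toy data, the tokenizer is deliberately naive, and real detectors train on large annotated corpora with much richer features.

```python
import math
from collections import Counter, defaultdict

def tokenize(text):
    # Deliberately naive: lowercase and split on whitespace.
    return text.lower().split()

def train_nb(examples):
    """Learn per-label word counts from (text, label) pairs."""
    label_counts = Counter()
    word_counts = defaultdict(Counter)
    vocab = set()
    for text, label in examples:
        label_counts[label] += 1
        for word in tokenize(text):
            word_counts[label][word] += 1
            vocab.add(word)
    return label_counts, word_counts, vocab

def predict_nb(model, text):
    """Return the label with the highest log posterior for an unseen text."""
    label_counts, word_counts, vocab = model
    total = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, count in label_counts.items():
        score = math.log(count / total)                         # log prior
        denom = sum(word_counts[label].values()) + len(vocab)   # add-one smoothing
        for word in tokenize(text):
            if word in vocab:  # words never seen in training are ignored
                score += math.log((word_counts[label][word] + 1) / denom)
        if score > best_score:
            best_label, best_score = label, score
    return best_label

# Toy labeled headlines, invented for illustration only.
training = [
    ("shocking miracle cure doctors hate", "fake"),
    ("you won't believe this shocking secret", "fake"),
    ("parliament passes annual budget bill", "real"),
    ("central bank holds interest rates steady", "real"),
]
model = train_nb(training)
print(predict_nb(model, "shocking secret cure"))  # fake
print(predict_nb(model, "bank holds budget"))     # real
```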

Identifying fake news

Ariwala (2022) contends that “Fake news is the outcome of presenting information incorrectly or the information does not represent the facts expected to be carried out” (para 4). Also, Bhikadiya (2020) posits that “Fake news is incorporating information that leads people to the wrong path. The aim is to further or impose certain ideas and is often achieved with political agendas. However, for media outlets, the ability to attract viewers to their websites is necessary to generate online advertising revenue, so it is necessary to detect fake news” (para 4-5).

Currently, AI is considered the cornerstone for separating good from bad in the news field, because it “makes it easy to learn behaviors, possibly through pattern recognition. Harnessing AI’s power leads to identifying fake news by taking a cue from articles flagged as inaccurate by people in the past” (Ariwala, 2021, para 6). Several AI and ML techniques have been identified for detecting fake news; four of them are depicted in Exhibit 1.

Exhibit 1: Fake news fighting techniques (Ariwala, 2021, para 10-14)

| Technique | Description |
|---|---|
| 1. Score web pages | Google pioneered this method. Scoring web pages depends on the accuracy of the facts presented. The technology’s significance has increased as it attempts to understand the pages’ context without relying on third-party signals. |
| 2. Weigh facts | Facts are weighed against reputed media sources using AI. An NLP engine goes through the subject of a story, its headline, main body text, and geolocation. AI also checks whether other sites report the same facts. |
| 3. Predict reputation | A website’s reputation is predicted by an ML model that considers its ‘domain name and Alexa web rank’. |
| 4. Discover sensational words | Attractive headlines easily capture audiences’ attention. Fake news headlines are discovered and flagged using AI-powered keyword analytics. |
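Technique 4 in Exhibit 1 (keyword analytics on headlines) can be sketched with a rule-based scorer. This is a minimal illustration under stated assumptions: the lexicon, the extra cues (ALL-CAPS words and exclamation marks), and the threshold are hypothetical choices, whereas the AI-powered systems Ariwala describes would learn such signals from data.

```python
import re

# Hypothetical lexicon and threshold, chosen for illustration only.
SENSATIONAL_TERMS = {"shocking", "unbelievable", "miracle", "secret", "exposed", "you won't believe"}
THRESHOLD = 2

def sensational_score(headline):
    """Count sensational cues: lexicon hits, ALL-CAPS words, and exclamation marks."""
    text = headline.lower()
    hits = sorted(term for term in SENSATIONAL_TERMS
                  if re.search(r"\b" + re.escape(term) + r"\b", text))
    caps = sum(1 for word in headline.split() if len(word) > 2 and word.isupper())
    return len(hits) + caps + headline.count("!"), hits

def flag_headline(headline, threshold=THRESHOLD):
    """Flag a headline whose cue count reaches the threshold."""
    score, _ = sensational_score(headline)
    return score >= threshold

print(flag_headline("SHOCKING: miracle cure exposed!"))      # True
print(flag_headline("Council approves new library budget"))  # False
```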

Artificial intelligence tools to spot fake information

Kiely and Robertson (2016) contend that “Fake news is nothing new... A lot of the viral claims aren’t “news” at all, but fiction, satire, and efforts to fool readers into thinking they’re for real” (para 1, 4). In fact, “Snopes.com has been exposing false viral claims since the mid-1990s, whether that’s fabricated messages, distortions containing bits of truth and everything in between” (para 3). According to News Co/Lab’s research (2019), “more than one-third of people surveyed could not identify a fake headline” (para 1). Therefore, readers who are unsure whether a piece of news is real can turn to several resources to check the information and the reliability of sources found on the internet. Next, this article briefly reviews several such tools.

A. Snopes is a website that helps spot fake stories.

News Co/Lab’s blog (2019) reports that since 1994 “Snopes has been used extensively to rate claims, articles, social media posts, images, and videos on their validity. Instead of categorizing the target by “true or false” ratings, Snopes uses more specific categories like: True, false, mixture, mostly true, mostly false, outdated, misattributed, miscaptioned, and more” (para 9). Moreover, Snopes provides interested users with a list of fake news sites. “Snopes is recognized by the International Fact-Checking Network, as one of News Co/Lab’s Best Practices” (Rosenthal, 2018).

Snopes states that its “fact-checking and original, investigative reporting lights the way to evidence-based and contextualized analysis,” and that it links to and documents its sources “so readers are empowered to do independent research and make up their minds” (Snopes, 2022a, para 1).

Snopes initiated its processes in 1994, “investigating urban legends, hoaxes, and folklore. Its founder is David Mikkelson” (ibid, para 2). The website “Snopes.com” is an independent publishing site owned by Snopes Media Group.

Process

According to Snopes (2022b), the process follows these steps:

1. Each entry is assigned to an editorial staff member. He/she performs a preliminary investigation and writes a first draft of the fact check.

2. The research is triggered by contacting the source of the claim, who is asked to elaborate on the claim and provide supporting information.

3. Snopes contacts expert individuals and organizations who have relevant expertise in the subject at hand.

4. According to Palma (2022), “Snopes runs a search out of secondary data (including news articles, scientific and medical journal articles, books, interview transcripts, and statistical sources) with a bearing on the topic.”

5. When the nature and complexity of the topic require it, other members of the editorial staff contribute expertise and editing and carry out additional research.

6. Editorial review: each fact check is handled by at least one editor.

7. Follow-up: Any piece that does not completely fit Snopes’ standards is subject to more investigation by one or more editors before being released for publication.
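The editorial workflow above can be modeled as a record that becomes publishable only after review. This sketch is hypothetical: the class, field, and method names are ours, the publication gate is an illustrative reading of steps 4 to 7, and the rating values are the categories quoted earlier in this section; Snopes’ internal tooling is not described in the sources.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional

class Rating(Enum):
    """Rating categories named in the text (not Snopes' complete list)."""
    TRUE = "True"
    FALSE = "False"
    MIXTURE = "Mixture"
    MOSTLY_TRUE = "Mostly True"
    MOSTLY_FALSE = "Mostly False"
    OUTDATED = "Outdated"
    MISATTRIBUTED = "Misattributed"
    MISCAPTIONED = "Miscaptioned"

@dataclass
class FactCheck:
    claim: str
    writer: str                                        # step 1: assigned staff member
    experts: List[str] = field(default_factory=list)   # step 3: experts contacted
    sources: List[str] = field(default_factory=list)   # step 4: secondary sources
    editors: List[str] = field(default_factory=list)   # steps 6-7: reviewing editors
    rating: Optional[Rating] = None

    def ready_to_publish(self) -> bool:
        # Hypothetical gate: a rating, documented sources, and at least one editor.
        return self.rating is not None and bool(self.sources) and bool(self.editors)

check = FactCheck(claim="Example viral claim", writer="staff writer")
check.sources.append("news article")
check.rating = Rating.MOSTLY_FALSE
print(check.ready_to_publish())  # False: no editor has reviewed it yet
check.editors.append("editor A")
print(check.ready_to_publish())  # True
```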

Reliability

Snopes highlights praise from users and the media, among others. Here are some excerpts (Snopes, 2022b):

BBC News describes Snopes as “the go-to bible for many fact-checkers.” (para 8)

“Patricia Turner, professor of folklore at UCLA, told the Los Angeles Times: Anything that raises hairs on the back of my neck, I go to Snopes.” (para 9)

FactCheck.org addressed the question, “Do the Snopes.com articles reveal a political bias?” (para 10)

Popular Mechanics, in 2019, included Snopes in “The 50 Most Important Websites of All Time” (para 11).

B. Pheme

Pheme has made a technological leap in assessing the veracity of user-generated and online content. Social networks are rich with doubtful information, lies, fake news, deception, half-truths, and facts, and they are rife with memes. The Oxford dictionary (2022) defines a ‘meme’ as an “Element of a culture or system of behavior passed from one individual to another by imitation or other non-genetic means… Also means an image, video, piece of text, etc..., typically humorous in nature, that is copied and spread rapidly by Internet users, often with slight variations” (para 1-2). But irrespective of a meme’s truthfulness, “the rapid spread of such information through social networks and other online media can have immediate and far-reaching consequences. In such cases, large amounts of user-generated content need to be analyzed quickly, yet it is not currently possible to carry out such complex analyses in real-time” (Pheme, 2022, para 1).

Consequently, the Pheme project brought together partners from seven countries working in “natural language processing and text mining, web science, social network analysis, and information visualization” (para 6). The project combines “big data analytics with advanced linguistic and visual methods. The results are suitable for direct application in medical information systems and digital journalism” (para 2).

Process (applied to the Twitter rumors case)

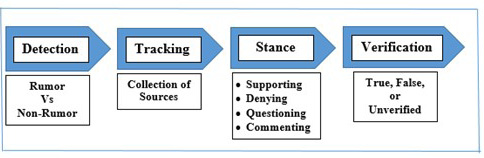

Kochkina, Liakata, and Zubiaga (2018a) adopted the rumor classification system proposed by Zubiaga et al. (2018). According to Kochkina et al. (2018a), “A rumor classification system is expressed as a sequence of subtasks, namely rumor detection, rumor tracking, rumor stance classification leading to rumor verification.” See Figure 1 for an illustration.

Figure 1. Pheme process

Source: Zubiaga, Aker, Bontcheva et al., 2018, p. 32:13; Kochkina, Liakata, and Zubiaga, 2018a, p. 3404.
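The four subtasks in Figure 1 can be chained as a pipeline in which each stage feeds the next. The sketch below uses deliberately crude keyword rules that are hypothetical stand-ins for the trained models used by Zubiaga et al. and Kochkina et al.; it only shows the data flow. The support/deny/query/comment stance labels follow the scheme commonly used in this line of rumor research, and the output labels match the True/False/Unverified veracity values of the Pheme dataset.

```python
import re
from collections import Counter

# Hypothetical cue lists; in the cited work each subtask is a trained classifier.
RUMOR_CUES = ("breaking", "reportedly", "unconfirmed")
DENY_CUES = ("fake", "hoax", "not true", "false")
QUERY_CUES = ("?", "source", "really")
SUPPORT_CUES = ("confirmed", "official", "verified")

def words(text):
    return set(re.findall(r"[a-z']+", text.lower()))

def detect_rumor(post):
    """Subtask 1: flag posts that look like unverified claims."""
    return any(cue in post.lower() for cue in RUMOR_CUES)

def track_rumor(source, stream, min_overlap=2):
    """Subtask 2: keep posts sharing at least `min_overlap` words with the source."""
    src = words(source)
    return [post for post in stream if len(src & words(post)) >= min_overlap]

def classify_stance(reply):
    """Subtask 3: label a reply as deny, query, support, or comment."""
    r = reply.lower()
    for label, cues in (("deny", DENY_CUES), ("query", QUERY_CUES), ("support", SUPPORT_CUES)):
        if any(cue in r for cue in cues):
            return label
    return "comment"

def verify_rumor(stances):
    """Subtask 4: naive vote between supporting and denying replies."""
    counts = Counter(stances)
    if counts["deny"] > counts["support"]:
        return "False"
    if counts["support"] > counts["deny"]:
        return "True"
    return "Unverified"

def rumor_pipeline(source, stream):
    if not detect_rumor(source):
        return None  # not treated as a rumor
    stances = [classify_stance(post) for post in track_rumor(source, stream)]
    return verify_rumor(stances)

source = "BREAKING: reportedly an explosion at the central station"
stream = [
    "explosion at central station is fake, police say",
    "is there a source for the explosion at central station?",
    "hoax, no explosion at central station",
    "lovely weather today",
]
print(rumor_pipeline(source, stream))  # False
```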

Kochkina et al. (2018a; 2018b) used a Pheme dataset that contains a collection of Twitter rumors and non-rumors posted during breaking news. The data is structured as follows:

1. Each event has a directory with two subfolders, rumors and non-rumors, each of which contains one folder per tweet, named with the tweet ID.

2. Source tweet: the tweet itself is stored in the ‘source-tweet’ directory of the tweet folder in question. This dataset is an extension of the PHEME dataset of rumors and non-rumors (https://figshare.com/articles/PHEME_dataset_of_rumours_and_non-rumours/4010619) (Kochkina et al., 2018b).

3. Reactions: the ‘reactions’ directory holds the set of tweets responding to that source tweet. The dataset at the link above contains rumors related to nine (9) events, each annotated with its veracity value: True, False, or Unverified.

4. Veracity and structure: each tweet folder contains ‘annotation.json’, with information about the veracity of the rumor, and ‘structure.json’, with information about the structure of the conversation.
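The directory layout described above can be traversed with a short loader. This is a sketch under assumptions: it follows the folder names given in the list, and it also accepts the British spellings ‘rumours’/‘non-rumours’ (used in the dataset’s URL) and a ‘source-tweets’ folder in case a downloaded copy uses them. It assumes one JSON file per tweet and returns ‘annotation.json’ and ‘structure.json’ as raw dictionaries rather than guessing their field names.

```python
import json
from pathlib import Path

# Folder names from the description above, plus British spellings.
LABEL_DIRS = {"rumors": True, "rumours": True, "non-rumors": False, "non-rumours": False}

def _read_json(path):
    return json.loads(path.read_text(encoding="utf-8")) if path.is_file() else None

def _read_folder(folder):
    """Read every *.json file in a folder (assumed: one file per tweet)."""
    if not folder.is_dir():
        return []
    return [_read_json(p) for p in sorted(folder.glob("*.json"))]

def load_threads(root):
    """Yield one dict per thread found under root/<event>/<rumor split>/<tweet id>/."""
    for event_dir in sorted(p for p in Path(root).iterdir() if p.is_dir()):
        for label_dir in sorted(p for p in event_dir.iterdir() if p.is_dir()):
            is_rumor = LABEL_DIRS.get(label_dir.name)
            if is_rumor is None:
                continue  # ignore folders that are not rumor/non-rumor splits
            for thread in sorted(p for p in label_dir.iterdir() if p.is_dir()):
                yield {
                    "event": event_dir.name,
                    "tweet_id": thread.name,
                    "is_rumor": is_rumor,
                    "source": (_read_folder(thread / "source-tweet")
                               or _read_folder(thread / "source-tweets")),
                    "reactions": _read_folder(thread / "reactions"),
                    "annotation": _read_json(thread / "annotation.json"),
                    "structure": _read_json(thread / "structure.json"),
                }
```

Usage, with a hypothetical local path: `for thread in load_threads("path/to/pheme"): print(thread["event"], thread["tweet_id"], thread["is_rumor"])`.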

Reliability

According to Pheme (2022), “the European Commission financed the Pheme project since the beginning in 2013” (para 12). This sustained funding from the European Commission lends Pheme institutional credibility as a reliable tool.

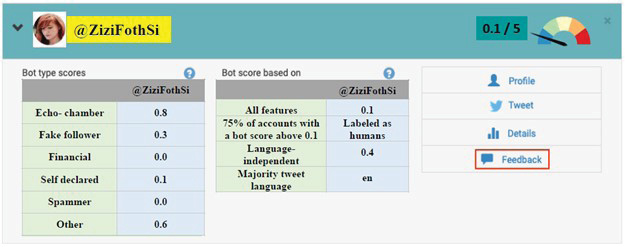

C. Botometer

Indiana University created Botometer (formerly “BotOrNot”) in response to the prevalence of bots on Twitter. Botometer is a free tool that “checks the activity of a Twitter account. It checks the likelihood that it is using automation (bots) and gives it a score. Higher scores mean more bot-like activity” (Twitter, 2020; Botometer, 2022a). Using the service requires Twitter authentication and permissions. Davis, Varol, Ferrara, et al. (2016) evaluated the earlier version, BotOrNot, describing it as a “publicly-available service that leverages more than one thousand features to evaluate the extent to which a Twitter account exhibits similarity to the known characteristics of social bots. Since its release in May 2014, BotOrNot has served over one million requests via our website and APIs” (p. 273).

Process

According to Botometer (2022b), “A botometer is a machine learning (ML) algorithm trained to calculate a score where low scores indicate likely human accounts and high scores indicate likely bot accounts” (para 7). The score is computed using the following process (itemized by the researchers from Botometer, 2022b):

1. When checking an account, the browser fetches its public profile and hundreds of its public tweets and mentions using the Twitter API.

2. This data is passed to the Botometer API, which extracts over a thousand features to characterize the account’s profile, friends, social network structure, temporal activity patterns, language, and sentiment.

3. The user may also label content based on selected hashtags or other features.

4. The features are passed to one or more ML models (the user may select a model) to compute the bot scores.

5. Botometer retains no data other than the account’s ID, the scores, and any feedback optionally provided by the user.

6. The scores are interpreted: low scores indicate likely human accounts, and high scores indicate likely bot accounts.
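Steps 2 and 4 above (extract features, then let an ML model turn them into a score) can be sketched as follows. This is not Botometer’s API or model: the three features, their weights, and the bias are hypothetical, and a single logistic function stands in for the trained classifiers. It only illustrates how temporal-activity and profile features could map to a score in which higher means more bot-like.

```python
import math
from statistics import mean, pstdev

# Hypothetical weights and bias for illustration only; Botometer's real models
# are trained on labeled accounts and use more than a thousand features.
WEIGHTS = {"regularity": 2.5, "tweets_per_day": 0.05, "follow_ratio": 0.8}
BIAS = -3.0

def extract_features(timestamps, followers, following):
    """Toy temporal and profile features; needs at least two posting times (seconds)."""
    ts = sorted(timestamps)
    gaps = [b - a for a, b in zip(ts, ts[1:])]
    avg_gap = mean(gaps)
    cv = pstdev(gaps) / avg_gap if avg_gap > 0 else 0.0  # spread of posting gaps
    days = (ts[-1] - ts[0]) / 86400 or 1.0
    return {
        "regularity": 1 / (1 + cv),          # 1.0 means clockwork posting
        "tweets_per_day": len(ts) / days,
        "follow_ratio": following / max(followers, 1),
    }

def bot_score(features):
    """Logistic score in (0, 1): low suggests a human, high suggests a bot."""
    z = BIAS + sum(WEIGHTS[name] * value for name, value in features.items())
    return 1 / (1 + math.exp(-z))

# Invented example accounts: one posts every 10 minutes, one irregularly.
bot = extract_features([i * 600 for i in range(288)], followers=50, following=2000)
human = extract_features([0, 3000, 50000, 52000, 140000, 200000], followers=300, following=250)
print(bot_score(bot) > bot_score(human))  # True
```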

Example: using Botometer to check an account that shared fetal MRI images

Figure 2. Images of MRIs of Fetus

Source: Katie (2019)

Botometer check:

Account: Katie (@ZiziFothSi)

Joined Twitter: October 2009

Followers: 13.8K (as of June 21, 2022)

Following: 1,142 accounts

Figure 3. Botometer results

The images have been retweeted with various comments by other community users. For example, Dapcevich (2022) states: “These ghoulish images once again rose to popularity in mid-May 2022 when the Twitter account @dhomochameleon once again shared the three images, which this time around garnered more than 61,000 retweets and nearly half a million likes on the social media platform…Spooky, right? Don’t say we didn’t warn you. The scariest part? The above images are real” (para 5).

Reliability

The Young Scot site (2022) reports that “many companies utilize bots to help answer customer queries. At the same time, bots are also used by some people for malicious reasons like spreading misinformation and fake news online” (para 2). In addition, “Bots, also called internet robots, spiders, crawlers, or web bots, are programs designed to do a specific task. Whether a particular bot is ‘good’ or ‘bad’ depends on what the person creating it has programmed it to do” (ibid, para 3). Indiana University has been very active in combating fake news with several tools, among them Botometer, created by OSoMe. In fact, “The Observatory on Social Media (OSoMe, pronounced awe•some) is a joint project of the Center for Complex Networks and Systems Research (CNetS) at the Luddy School, the Media School, and the Network Science Institute (IUNI) at Indiana University. OSoMe unites data scientists and journalists in studying the role of media and technology in society and building tools to analyze and counter disinformation and manipulation on social media” (OSOME, 2022, para 1).

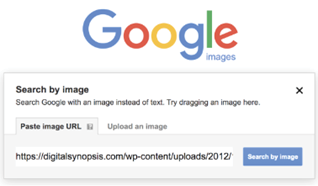

D. Google Reverse Image Search

Misleading photos, scams, and the unauthorized use of people’s images are common on social media nowadays. Ho (2018) posits that “A lot of the fake news comes in the form of pictures. Photos that try to convey some information but are actually from another entirely unrelated incident are common on social media” (para 4). Consequently, “tools to debunk all malicious activities are needed. Google Reverse Image Search is an example of the needed tools” (Hautala, 2022, para 4). Figures 4 and 5 illustrate websites that help with reverse image searching.

Figure 4. Google reverse image search

Source: Fach, 2020.

Figure 5. Reverse image search

Source: http://www.reverse-image-search.com

According to Robbins (2022), “The reverse image search platform takes the lead with image numbers, offering over 41.9 billion images and continues to expand” (para 7). Also, Reverse Image Search (2022) reports, “These tools are used as image source locators or image source finders, for obtaining higher resolutions of similar images, looking for images from varying sources, looking for a particular location in the image, and getting the details of an image” (para 2).

Process as reported by Hautala (2022, para 11-15)

“1. The process starts with a browser (i.e., open Google Images on Safari, Firefox, or Chrome). Then, follow the options depicted in Figure 6.

2. Reverse image searches (relying on either Google’s Images or Lens service) provide a list of websites displaying the photo or image and a link and description” (para 11-15).

Figure 6. Reverse image search process

Source: Hautala, 2022, para 11-15.

Reliability

“Journalists can use the reverse search option to find the source of an image or to know the approximate date when a picture was first published on the Internet” (Labnol, 2022, para 4).

Reverse image search “[i]s an image retrieval query technique that is content-based and the sample image is given to Content-based image retrieval (CBIR) system, and then the search is centered upon the sample image and formulating a search query, in terms of data retrieval” (Latif, Rasheed, Sajid, et al., 2019, p. 2; Content Arcade, 2022, para 2). In other words, the user submits an image instead of keywords, and the system retrieves images with similar visual content. The two tools shown earlier (Figures 4 and 5), namely ‘Google reverse image search’ and ‘Reverse image search’, lead to similar results.
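To make the CBIR idea concrete, the sketch below uses a simple, well-known perceptual fingerprint, the "average hash": each pixel of a tiny grayscale thumbnail contributes one bit (1 if brighter than the mean), and indexed images are ranked by the Hamming distance between hashes. This is an illustration under stated assumptions, not how Google or the tool in Figure 5 actually works: images are assumed to be already decoded and downscaled into small grids of 0–255 values, and the index entries and distance cut-off are hypothetical.

```python
from typing import Dict, List

Grid = List[List[int]]  # grayscale pixel values 0-255, already downscaled


def average_hash(grid: Grid) -> int:
    """Pack one bit per pixel: 1 if the pixel is brighter than the grid mean."""
    pixels = [p for row in grid for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of bit positions in which two hashes differ."""
    return bin(a ^ b).count("1")


def search(query: Grid, index: Dict[str, int], max_distance: int = 2) -> List[str]:
    """Query by example: return indexed sources with a close hash, nearest first."""
    q = average_hash(query)
    hits = sorted((hamming(q, h), src) for src, h in index.items())
    return [src for d, src in hits if d <= max_distance]


if __name__ == "__main__":
    original = [[10, 200, 10, 200], [200, 10, 200, 10],
                [10, 200, 10, 200], [200, 10, 200, 10]]
    unrelated = [[0] * 4, [0] * 4, [255] * 4, [255] * 4]
    # Hypothetical index mapping where an image was seen to its hash.
    index = {"news-site.example/photo-2015": average_hash(original),
             "blog.example/other-photo": average_hash(unrelated)}
    brighter_copy = [[min(255, p + 20) for p in row] for row in original]
    print(search(brighter_copy, index))
```

Because the hash depends on relative brightness, a uniformly brightened repost still maps to the same fingerprint, which is why this kind of search can trace a recycled photo back to an earlier publication.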

E. TinEye

Robbins (2022) posits that “In a single day, a whopping total of 300 million photos find their way to the web. It can be tough to figure out who claims rightful ownership of what picture” (para 2). TinEye is another image retrieval query tool. Robbins (2022) contends that “TinEye was the first of its kind, using image identification technology to operate” (para 7). TinEye is “an image search and recognition company. It has expertise in computer vision, pattern recognition, neural networks, and machine learning” (TinEye, 2022). “TinEye can quickly find copyright violations, detect image fraud, and shows if a picture was changed, modified, or resized from its original state” (Robbins, 2022, para 8).
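TinEye's matching technology is proprietary, so the following is only a sketch of why a perceptual fingerprint can recognise a resized copy while still flagging an edited one. It assumes square grayscale grids whose side is a multiple of 4, block-average downscaling to a 4×4 thumbnail, and a "difference hash" in which each bit records whether a pixel is brighter than its right-hand neighbour; the two distance thresholds are hypothetical.

```python
from typing import List

Grid = List[List[int]]  # square grayscale grid, side a multiple of 4


def downscale(grid: Grid, size: int = 4) -> Grid:
    """Block-average the grid down to size x size pixels."""
    step = len(grid) // size
    return [
        [
            sum(grid[r * step + i][c * step + j] for i in range(step) for j in range(step))
            // (step * step)
            for c in range(size)
        ]
        for r in range(size)
    ]


def difference_hash(grid: Grid) -> int:
    """One bit per horizontal neighbour pair: 1 if the left pixel is brighter."""
    bits = 0
    for row in downscale(grid):
        for left, right in zip(row, row[1:]):
            bits = (bits << 1) | (1 if left > right else 0)
    return bits


def compare(original: Grid, candidate: Grid, same: int = 1, modified: int = 6) -> str:
    """Classify a candidate by the Hamming distance of its hash (hypothetical thresholds)."""
    d = bin(difference_hash(original) ^ difference_hash(candidate)).count("1")
    if d <= same:
        return "same image (possibly resized or recompressed)"
    if d <= modified:
        return "likely modified copy"
    return "different image"


if __name__ == "__main__":
    original = [[200, 150, 100, 50], [50, 100, 150, 200],
                [200, 150, 100, 50], [50, 100, 150, 200]]
    # Upscale 2x by repeating every pixel in a 2x2 block.
    resized = [[p for p in row for _ in range(2)] for row in original for _ in range(2)]
    edited = [row[:] for row in original]
    edited[1] = [200, 150, 100, 50]  # one region altered
    print(compare(original, resized))
    print(compare(original, edited))
```

Resizing changes the pixel count but not the relative brightness pattern, so the hash survives it, whereas a local edit flips some bits, giving a small but non-zero distance.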

Process

TinEye relies on the reverse image search technique, that is, searching by image rather than by keywords. A search can be started in three ways (see Figure 7):

Option 1. Uploading an image to the TinEye search engine.

Option 2. Searching by URL.

Option 3. Dragging and dropping an image to start the search.

Figure 7. TinEye

Source: Robbins, 2022

Reliability

“TinEye constantly crawls the web and adds images to its index. Today, the TinEye index is over 56.5 billion images” (TinEye, 2022).

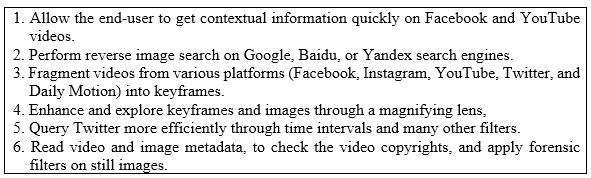

F. InVID

With fake news and disinformation spreading rapidly, alongside other means used to confuse social media users, many tools have been developed that fight back through “Human, Crowd, and Artificial Intelligence” (InVid, 2022).

InVID’s vision capitalizes on its “innovation action to develop a knowledge verification platform to detect emerging stories and assess the reliability of newsworthy video files and content spread via social media” (InVid, 2022a). This project was funded by the European Union (InVid, 2022b).
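Reverse image search works on still images, so checking a video typically begins by reducing it to a few representative keyframes that can then be searched. The sketch below is a generic, hypothetical shot-change heuristic, not InVID's own segmentation method: a new keyframe is taken whenever the mean absolute pixel difference from the last keyframe exceeds a threshold. Frames are assumed to be already decoded into equally sized grayscale grids, and the threshold of 30 is an arbitrary illustrative value.

```python
from typing import List

Frame = List[List[int]]  # grayscale pixels 0-255; all frames the same size


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Average absolute per-pixel difference between two frames."""
    total = sum(abs(x - y) for ra, rb in zip(a, b) for x, y in zip(ra, rb))
    return total / (len(a) * len(a[0]))


def select_keyframes(frames: List[Frame], threshold: float = 30.0) -> List[int]:
    """Return indices of frames that start a visually new segment."""
    if not frames:
        return []
    keyframes = [0]
    for i in range(1, len(frames)):
        # Compare against the most recent keyframe, not the previous frame,
        # so slow drifts eventually trigger a new keyframe as well.
        if mean_abs_diff(frames[keyframes[-1]], frames[i]) > threshold:
            keyframes.append(i)
    return keyframes


if __name__ == "__main__":
    dark = [[10] * 4 for _ in range(4)]
    dark_noisy = [[15] * 4 for _ in range(4)]
    bright = [[200] * 4 for _ in range(4)]
    print(select_keyframes([dark, dark_noisy, bright, bright, dark]))
```

Each selected frame could then be submitted to a reverse image search tool such as those in Figures 4, 5, and 7 to look for earlier copies of the footage.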

Process and Reliability

The InVID platform “enables novel newsroom applications for broadcasters, news agencies, web pure-players, newspapers, and publishers to integrate social media content into their news output. They do not have to struggle to know if they can trust the material or how they can reach the user to ask permission for re-use” (InVid, 2022b).

The InVID platform provides multi-functional tools, listed in Exhibit 2, which quotes content from InVid (2022c, para 2).

To access the InVid plugin (see Figure 8):

• “Open InVID launches the plugin

• Video URLs display the URL of a video presentation on a web page

• Image URLs display the URL of an image present on a web page” (InVid, 2022c, para 3)

Figure 8. InVid Verification Platform

Source: https://www.invid-project.eu/tools-and-services/invid-verification-plugin/

Exhibit 2: InVid platform available functions

Source: InVid, 2022c, para 2.

Conclusion

This study addresses its main research question: “What methods and tools are adopted by artificial intelligence to detect fake news, especially on social media platforms and depending on artificial intelligence laboratories?” To answer it, the paper descriptively assessed six methods and tools from technical, procedural, and practical perspectives, together with their roles in mitigating fake news, misinformation, and the unethical use of photos, pictures, and videos on social media sites. It therefore offers insight to end users such as journalists, reporters, content writers, and media firms.

Although the tools and methods discussed in this paper are built on artificial intelligence and machine learning algorithms, one urgent question keeps presenting itself: “Can artificial intelligence help end fake news?” (Cassauwers, 2019).

Researchers around the world have contributed, and continue to contribute, best practices and newly developed methodologies and approaches to answer this question. However, as technology evolves at an ever quicker pace, its applications accelerate as well, and ethical use remains a challenge (Cassauwers, 2019). Three continuing challenges and projects offering possible solutions are presented next (among many other existing cases):

1. The Fandango Project

“FANDANGO aims to aggregate and verify different typologies of news data, media sources, social media, open data, to detect fake news and provide a more efficient and verified communication for all European citizens” (CORDIS, 2022).

The FANDANGO project started on 1 January 2018 and ended on 31 March 2021, having achieved its aim “to break data interoperability barriers providing unified techniques and an integrated big data platform to support traditional media industries to face the new “data” news economy with a better transparency to the citizens under a Responsible, Research, and Innovation prism” (CORDIS, 2022, para 1). According to Cassauwers (2019), who quotes Francesco Nucci, applications research director at the Engineering Group, Italy, the project has three components. The first is ‘content-independent detection’, using tools and methods such as those discussed in this paper, among other means. The second is ‘spotting false claims in the content’: drawing on the work of human fact-checkers and looking for online pages or social media posts with similar words and claims. The third allows journalists to respond to fake news by pooling national data to address claims, or even by applying data from the European Copernicus satellites (Cassauwers, 2019, paras 7–12).
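The second FANDANGO component, finding posts whose words and claims resemble those already debunked, can be illustrated with plain bag-of-words cosine similarity. This is a deliberately simple stand-in: the project's actual models are not described here, and the stored claims, the tokenizer, and the 0.5 threshold below are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def vectorize(text: str) -> Counter:
    """Lower-case word counts; a real system would also drop stop words and stem."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors (0.0 if either is empty)."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def match_claims(post: str, debunked: List[str], threshold: float = 0.5) -> List[Tuple[float, str]]:
    """Return (similarity, claim) pairs above the threshold, most similar first."""
    p = vectorize(post)
    scored = [(cosine(p, vectorize(c)), c) for c in debunked]
    return sorted(((s, c) for s, c in scored if s >= threshold), reverse=True)


if __name__ == "__main__":
    # Hypothetical store of claims already rated false by fact-checkers.
    debunked = ["vaccines cause sudden adult death syndrome",
                "mri scans show demons in pregnancy"]
    print(match_claims("New study says vaccines cause sudden adult death syndrome!", debunked))
```

A match does not prove the new post false; it points a fact-checker to an existing verdict that is likely to apply.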

2. The Global Disinformation Index (GDI)

The GDI “collects data on how misinformation – or disinformation, when deliberate – travels and spreads. The GDI index, put out by a US-based non-profit organization, can help governments, media professionals, and other web users assess the trustworthiness of online content” (International Telecommunication Union (ITU), 2022, para 3).

This index uses the latest AI tools and techniques. The GDI triages (methodizes, prioritizes, emphasizes, and orders) “unreliable content from several of the world’s most prominent news markets. Combining AI results with independent human analysis, the index then rates global news publications based on their respective disinformation risk scores” (ibid, para 5).
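The GDI's actual scoring methodology is not detailed in the passage above; the sketch below only illustrates the general pattern it describes, blending an automated signal with independent human review into a per-publication risk score. The 0–100 scale, the 40/60 weighting, and the risk bands are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Assessment:
    ai_score: float     # 0-100: share of sampled content an AI model flags as unreliable
    human_score: float  # 0-100: risk assigned by independent human reviewers


def risk_score(a: Assessment, ai_weight: float = 0.4) -> float:
    """Weighted blend of automated and human assessments (hypothetical weights)."""
    if not 0.0 <= ai_weight <= 1.0:
        raise ValueError("ai_weight must be between 0 and 1")
    return ai_weight * a.ai_score + (1.0 - ai_weight) * a.human_score


def risk_band(score: float) -> str:
    """Map a 0-100 score to a hypothetical rating band."""
    if score < 30:
        return "low"
    if score < 60:
        return "medium"
    return "high"


if __name__ == "__main__":
    outlet = Assessment(ai_score=80, human_score=50)
    score = risk_score(outlet)
    print(round(score, 1), risk_band(score))
```

Giving the human assessment the larger weight reflects the passage's emphasis on combining AI results with independent human analysis rather than relying on the model alone; the exact split is a design choice, not the GDI's.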

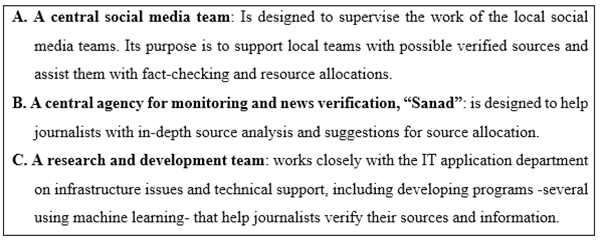

3. Al Jazeera Media Institute

Al Jazeera’s network consists of 16 channels and news portals. According to El Gody (2021), these entities “work independently when it comes to its organizational structure, editorial policy, and target audience. Al Jazeera network follows a “dynamic” structure with an integration of interest” (p. 27). Given that “source verification and managing organizational resources is an acute dilemma,” Al Jazeera opted to use “AI, ML, and Natural language processing (NLP) to automate the process of identifying fake news to separate the ‘truth’ from ‘fake’ in the news field” (El Gody, 2021, p. 5).
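El Gody (2021) describes using NLP and ML to separate ‘truth’ from ‘fake’, but the report's specific models are not reproduced here. As a generic illustration of the supervised text-classification approach common in fake news detection, the sketch below trains a multinomial Naive Bayes classifier with add-one (Laplace) smoothing. The training headlines are invented toy examples, far too few for real use.

```python
import math
import re
from collections import Counter, defaultdict
from typing import Dict, List, Tuple


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayes:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, docs: List[Tuple[str, str]]) -> "NaiveBayes":
        self.word_counts: Dict[str, Counter] = defaultdict(Counter)
        self.doc_counts: Counter = Counter()
        for text, label in docs:
            self.doc_counts[label] += 1
            self.word_counts[label].update(tokenize(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, text: str) -> str:
        """Return the label with the highest log posterior; unseen words are skipped."""
        total_docs = sum(self.doc_counts.values())
        best_label, best_logp = "", -math.inf
        for label, n_docs in self.doc_counts.items():
            counts = self.word_counts[label]
            denom = sum(counts.values()) + len(self.vocab)
            logp = math.log(n_docs / total_docs)  # class prior
            for w in tokenize(text):
                if w in self.vocab:
                    logp += math.log((counts[w] + 1) / denom)
            if logp > best_logp:
                best_label, best_logp = label, logp
        return best_label


if __name__ == "__main__":
    train = [  # invented toy headlines
        ("shocking miracle cure doctors hate revealed", "fake"),
        ("you won't believe this secret they hide", "fake"),
        ("ministry publishes annual budget report", "real"),
        ("central bank keeps interest rates unchanged", "real"),
    ]
    model = NaiveBayes().fit(train)
    print(model.predict("shocking secret cure revealed"))
```

Working in log probabilities avoids numerical underflow when many word probabilities are multiplied; production systems typically use much larger labelled corpora and stronger models.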

Al Jazeera handles and verifies fake news and misinformation using several methods and tools, depicted in Exhibit 3.

Exhibit 3: Al Jazeera network against fake news

Source: El Gody, 2021, pp. 27-28.

Concluding Facts and Recommendations:

• Fake news continues to unsettle users worldwide. There is a dire need for the continuous development of technologically advanced tools that exploit the power of AI, Big Data, and ML to mitigate, control, and possibly stop the authoring and dissemination of fake news, thereby preventing the spread of misleading and false news.

• Artificial intelligence laboratories support AI’s capabilities in combating fake news and misinformation, and in building ready-to-use technology solutions and analytics platforms.

• AI, ML, and Big Data complement one another in detecting and preventing fake news: together they collect data, support useful analytics, and verify content, offering solutions to large-database and analytical problems.

• AI has been able to discern between human and automated (bot) activity on social media, as Botometer does when it scores how bot-like an account is.

• Large technology and social media companies (for example, Facebook, Twitter, and YouTube) are working very hard on the automatic detection and identification of fake news using artificial intelligence.

• There is a dire need to hire people with expertise to work with AI to verify data accuracy, because successful AI and ML tools for detecting fake news rely on human experts and researchers, as the cases of Snopes and Pheme show.

Fake news is not just a media problem but also a social and political one. Its roots lie in technology, which makes its social implications more significant, i.e., “increased political polarization, greater partisanship, and mistrust in mainstream media and government” (Anderson and Rainie, 2017). Solving the problem of fake news requires collaboration across disciplines. The best way to stop and eradicate fake news is “to depend on people, motivate them to be critical thinkers, and not take every story at face value” (Bouygues, 2019). Users are also encouraged to apply artificial intelligence techniques and tools to detect fake news before sharing any information, especially on social media platforms, and in particular when a post relies on emotion to capture people’s attention. Vosoughi, Roy, and Aral’s (2018) research highlighted “the role emotions play when sharing news on social media and that reading true news mostly produces feelings of joyfulness, unhappiness, expectation, and trust. Also, reading fake news produces amazement, anxiety, shock, and repulsion. It is suggested these emotions and feelings play an important role when deciding to share something on social media” (p. 1146).
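The advice to pause before sharing emotionally charged posts can be supported by a simple lexicon-based check. The sketch below is illustrative only: it uses the four reactions named in the quoted passage (amazement, anxiety, shock, and repulsion) as categories, but the cue words and the two-hit threshold are hypothetical, and practical tools rely on validated emotion lexicons or trained classifiers. A flag means "verify first", not "fake".

```python
import re
from typing import Dict, List

# Hypothetical mini-lexicon for reactions the quoted study associates with fake news.
EMOTION_LEXICON: Dict[str, List[str]] = {
    "amazement": ["unbelievable", "incredible", "miracle"],
    "anxiety": ["danger", "deadly", "panic", "warning"],
    "shock": ["shocking", "horrifying", "outrageous"],
    "repulsion": ["disgusting", "vile", "gross"],
}


def emotion_hits(text: str) -> Dict[str, int]:
    """Count cue words per emotion category in the post."""
    words = re.findall(r"[a-z]+", text.lower())
    return {emo: sum(words.count(w) for w in cues) for emo, cues in EMOTION_LEXICON.items()}


def should_verify_first(text: str, min_hits: int = 2) -> bool:
    """Flag a post for fact-checking before sharing if it carries several emotional cues."""
    return sum(emotion_hits(text).values()) >= min_hits


if __name__ == "__main__":
    for post in ["SHOCKING: deadly miracle cure they hide!",
                 "Council approves new library opening hours"]:
        print(should_verify_first(post), post)
```

Such a check is cheap enough to run in a browser extension or sharing dialog, prompting users to consult fact-checking tools before forwarding a post.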

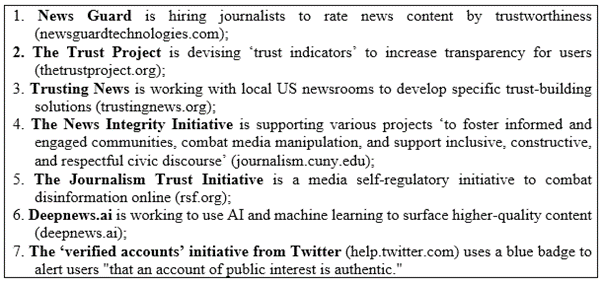

News organizations and volunteers, in partnership with social media platforms, should train users on AI tools, making it easier for people to tell fact from fake. That could reduce the chances that ‘fictional and misleading’ stories gain popularity online. In support of such action, Exhibit 4 depicts the global move toward integrating efforts to foster integrity and trust in combatting fake news.

Exhibit 4: Global ‘Trust’ initiatives

Source: Wilding, Fray, Molitorisz, and McKewon, 2018, p. 33

References

Ada. (2021, November 4) Why is a horizontal approach to AI the way to go? Available at: https://iris.ai/technology/why-is-a-horizontal-approach-to-ai-the-way-to-go-ai-across-domains/ (Accessed: 18 October 2022).

Anderson, J. and Rainie, L. (2017, October 19) The Future of Truth and Misinformation Online. Available at: https://www.pewresearch.org/internet/2017/10/19/the-future-of-truth-and-misinformation-online/ (Accessed: 23 October 2022).

Arora, S. (2020, January 29) Supervised vs Unsupervised vs Reinforcement. Available at: https://www.aitude.com/supervised-vs-unsupervised-vs-reinforcement/ (Accessed: 19 October 2022).

Barfield, W.A. (2021) ‘Systems and Control Theory Approach for Law and Artificial Intelligence: Demystifying the Black-Box’, J, 4, pp. 564–576. https://doi.org/10.3390/j4040041

Bhikadiya, M. (2020, October 5) Fake News Detection Using Machine Learning. The Startup. Available at: https://medium.com/swlh/fake-news-detection-using-machine-learning-69ff9050351f (Accessed: 22 June 2022).

Botometer. (2022a) Botometer: An OSoMe project (bot•o•meter). Available at: https://botometer.osome.iu.edu/ (Accessed: 20 October 2022).

Botometer. (2022b) FAQ: Frequently Asked Questions. Available at: https://botometer.osome.iu.edu/faq#which-score (Accessed: 20 October 2022).

Bouygues, H.L. (2019, November) Fighting Fake News: Lessons From The Information Wars. Reboot Elevating Critical Thinking. Available at: https://reboot-foundation.org/wp-content/uploads/_docs/Fake-News-Report.pdf (Accessed: 23 October 2022).

Cassauwers, T. (2019, April 15) Can artificial intelligence help end fake news? Available at: https://ec.europa.eu/research-and-innovation/en/horizon-magazine/can-artificial-intelligence-help-end-fake-news (Accessed: 22 October 2022).

Content Arcade. (2022) Reverse Image Search [Blog]. Available at: http://www.reverse-image-search.com (Accessed: 21 October 2022).

CORDIS-European Union. (2022). FAke News discovery and propagation from big Data ANalysis and artificial intelliGence Operations. doi: 10.3030/780355. Available at: https://cordis.europa.eu/project/id/780355 (Accessed: 22 October 2022)

Dapcevich, M. (2022, June 2) Do These Images Show MRIs of Fetuses? When science becomes nightmarish. Available at: https://www.snopes.com/fact-check/mris-of-fetuses/ (Accessed: 20 October 2022).

DataReportal. (2022a, February 15). Digital 2022 Lebanon-February 2022 v01. Available at: https://www.slideshare.net/DataReportal/digital-2022-lebanon-february-2022-v01 (Accessed: 12 December 2022).

DataReportal. (2022b, February 15). Digital 2022 Lebanon-February 2022 v01. Available at: https://datareportal.com/reports/digital-2022-lebanon?rq=lebanon (Accessed: 11 December 2022).

Davis, C.A., Varol, O., Ferrara, E., Flammini, A. and Menczer, F. (2016) ‘BotOrNot: A System to Evaluate Social Bots’, Proceedings of the 25th International Conference Companion on World Wide Web, April 2016, pp. 273–274. https://doi.org/10.1145/2872518.2889302

El Gody, A. (2021) Using Artificial Intelligence in the Al Jazeera Newsroom to Combat Fake News. Al Jazeera Media Institute, Doha, Qatar. Available at: https://institute.aljazeera.net/sites/default/files/2021/Using%20Artificial%20Intelligence%20in%20the%20Al%20Jazeera%20Newsroom%20to%20Combat%20Fake%20News.pdf (Accessed: 23 October 2022).

Evon, D. (2022, June 8) How to Spot a Deepfake. Available at: https://www.snopes.com/articles/423004/how-to-spot-a-deepfake/?utm_term=Autofeed&utm_medium=Social&utm_source=Facebook#Echobox=1655933731 (Accessed: 18 June 2022)

Fach, M. (2020, July 30) ‘How to Do a Reverse Image Search (Desktop and Mobile)’, [Blog]. Available at: https://www.semrush.com/blog/reverse-image-search/ (Accessed: 21 October 2022).

Global Stat. (2022, November). Social Media Stats. Available at: https://gs.statcounter.com/social-media-stats/all/lebanon (Accessed: 12 December 2022).

Gottfried, J. (2019, June 14) About three-quarters of Americans favor steps to restrict altered videos and images. Pew Research Center. Available at: https://www.pewresearch.org/fact-tank/2019/06/14/about-three-quarters-of-americans-favor-steps-to-restrict-altered-videos-and-images/. (Accessed: 18 October 2022)

Hautala, L. (2022, April 6) Reverse Google Image Search Can Help You Bust Fake News and Fraud. Available at: https://www.cnet.com/news/privacy/reverse-google-image-search-can-help-you-bust-fake-news-and-fraud/ (Accessed: 21 October 2022).

Hawking, S. (2016, October 20) Stephen Hawking warns of dangerous. Available at: https://www.bbc.com/news/av/technology-37713942 (Accessed: 18 October 2022).

Hejase, H.J. and Sabra, Z. (1999a) ‘Is Lebanon Ready for Robotics?’ Proceedings of the Second International Al-Shaam Conference on Information Technology, Damascus, Syria, April 26-29, 1999. Available at: https://www.researchgate.net/publication/236883861_Is_Lebanon_Ready_for_Robotics (Accessed: 20 November 2022).

Hejase, H.J. (1999b) ‘Automation Technology and Management Attitude towards its Implementation: A Lebanese Case Study’, Proceedings of the 11th Arab International Conference on Training and Management Development, Cairo, Egypt, April 27-29, 1999. doi:10.13140/2.1.1417.5045 (Accessed: 20 November 2022).

Hejase, A.J. and Hejase, H.J. (2013) Research Methods: A Practical Approach for Business Students. 2nd edn. Philadelphia, PA, USA: Masadir Incorporated.

Ho, E. (2018, January 14) 4 tips for spotting fake news, with Google Reverse Image Search and other online tools. Available at: https://www.scmp.com/yp/report/journalism-resources/article/3072927/4-tips-spotting-fake-news-google-reverse-image (Accessed: 21 October 2022)

International Telecommunication Union (ITU). (2022, May 2) How AI can help fight misinformation. Available at: https://www.itu.int/hub/2022/05/ai-can-help-fight-misinformation/ (Accessed: 22 October 2022).

InVid. (2022a) Invid project. Available at: https://www.invid-project.eu/ (Accessed: 21 October 2022).

InVid. (2022b) InVid Description. Available at: https://www.invid-project.eu/description/ (Accessed: 22 October 2022).

InVid. (2022c) InVid Verification Plugin. Available at: https://www.invid-project.eu/tools-and-services/invid-verification-plugin/ (Accessed: 22 October 2022).

Javatpoint. (2021) Difference between Artificial intelligence and Machine learning. Available at: https://www.javatpoint.com/difference-between-artificial-intelligence-and-machine-learning (Accessed: 18 October 2022)

Kaelbling, L.P., Littman, M.L. and Moore, A.W. (1996) ‘Reinforcement learning: a survey’, Journal of Artificial Intelligence Research, 4, pp. 237–285.

Kaplan, A. and Haenlein, M. (2019) ‘Siri, Siri, in my hand: Who’s the fairest in the land? On the interpretations, illustrations, and implications of artificial intelligence’, Business Horizons, 62, pp. 15–25.

Katie (2019) ‘The real reason they discourage MRIs during pregnancy is that then people would realize they’re incubating nightmare demons and would be rightfully terrified’ [Twitter: @ZiziFothSi]. Available at: https://twitter.com/ZiziFothSi/status/1395039903440900101 (Accessed: 22 June 2022).

Kiely, E. and Robertson, L. (2016, November 18). How to Spot Fake News. Available at: https://www.factcheck.org/2016/11/how-to-spot-fake-news/ (Accessed: 18 October 2022).

Kochkina, E., Liakata, M. and Zubiaga, A. (2018a) ‘All-in-one: Multi-task Learning for Rumour Verification’. In Proceedings of the 27th International Conference on Computational Linguistics, pp. 3402–3413, Santa Fe, New Mexico, USA. Association for Computational Linguistics. https://aclanthology.org/C18-1288.pdf

Kochkina, E., Liakata, M. and Zubiaga, A. (2018b) ‘PHEME dataset for Rumour Detection and Veracity Classification’. figshare. Dataset. https://doi.org/10.6084/m9.figshare.6392078.v1

Labnol. (2022) Reverse Image Search. Digital Inspiration. Available at: https://www.labnol.org/reverse/ (Accessed: 21 October 2022)

Lahby, M., Pathan, S.K., Maleh, Y., Mohamed, W. and Yafooz, S. (eds) (2022) Combating false news with computational intelligence techniques. 1st edn. Berlin, Germany: Springer.

Latif, A., Rasheed, A., Sajid, U., Ahmed, J., Ali, N., Ratyal, N.I., Zafar, B., Hanif Dar, S.D., Sajid, M. and Khalil, T. (2019) ‘Content-Based Image Retrieval and Feature Extraction: A Comprehensive Review’, Hindawi Mathematical Problems in Engineering 2019, pp. 1-21. Article ID 9658350. https://doi.org/10.1155/2019/9658350

Lu, J., Wu, D., Mao, M., Wang, W. and Zhang, G. (2015) ‘Recommender system application developments: a survey’, Decision Support Systems, 74, pp. 12–32.

Marr, B. (2018, May 16) Fake News And How Artificial Intelligence Tools Can Help. Forbes. Available at: https://www.forbes.com/sites/bernardmarr/2018/05/16/fake-news-and-how-artificial-intelligence-tools-can-help/?sh=30d694c6271d (Accessed: 18 October 2022)

McCarthy, J. (2022). What is AI? / Basic Questions. John McCarthy: Stanford University. Available at: http://jmc.stanford.edu/artificial-intelligence/what-is-ai/index.html#:~:text=What%20is%20artificial%20intelligence%3F,methods%20that%20are%20biologically%20observable (Accessed: 18 October 2022)

Napoleoncat. (2022a, November). Facebook users in Lebanon-November 2022. Available at: https://napoleoncat.com/stats/facebook-users-in-lebanon/2022/11/ (Accessed: 12 December 2022)

Napoleoncat. (2022b, November). Instagram users in Lebanon-November 2022. Available at: https://napoleoncat.com/stats/instagram-users-in-lebanon/2022/02/#:~:text=There%20were%202%20300%20200,user%20group%20(733%20600). (Accessed: 12 December 2022).

Nof, S.Y. (2009) Springer Handbook of Automation. Springer Science & Business Media.

OSOME. (2022) OSoMe. Available at: https://osome.iu.edu/ (Accessed: 20 October 2022).

Oxford Dictionaries. (2022) meme. Available at: https://en.oxforddictionaries.com/definition/meme (Accessed: 19 October 2022).

Palma, B. (2022, June 15) No, Vaccines Aren’t Linked to Sudden Adult Death Syndrome. Available at: https://www.snopes.com/fact-check/vaccines-sudden-adult-death-syndrome/ (Accessed: 21 June 2022)

Parlett-Pelleriti, C.M., Stevens, E., Dixon, D. and Linstead, E.J. (2022) ‘Applications of Unsupervised Machine Learning in Autism Spectrum Disorder Research: a Review’. Rev J Autism Dev Disord (2022), pp. 1-16. https://doi.org/10.1007/s40489-021-00299-y

Pheme. (2022) About Pheme. Available at: https://www.pheme.eu/ (Accessed: 19 October 2022).

Reverse Image Search. (2022) Reverse Image Search - Search by Image, Reverse Photo Lookup. Available at: https://www.reverse-image-search.com/ (Accessed: 20 November 2022).

Robbins, R. (2022) TinEye VS Google VS ImageRaider: Reverse Image Search Tools Compared. Available at: https://clictadigital.com/tineye-vs-google-vs-imageraider-reverse-image-search-tools-compared/ (Accessed: 21 October 2022).

Rosenthal, C. (2018, March 19) Best Practice: International Fact-Checking Network. Available at: https://newscollab.org/2018/03/19/best-practice-international-fact-checking-network/ (Accessed: 22 June 2022).

Roszell, O. (2021, December 8) AI Learned How to Generate Fake News, and It’s Terrifying. Medium: CodeX. Available at: https://medium.com/codex/ai-learned-how-to-generate-fake-news-and-its-terrifying-37cadc7e94a7 (Accessed: 22 June 2022)

Russell, S.J. and Norvig, P. (2009) Artificial Intelligence: A Modern Approach. Upper Saddle River, New Jersey: Prentice Hall.

Sarker, I.H. (2021) Machine Learning: Algorithms, Real-World Applications, and Research Directions. SN COMPUT. SCI. 2, pp. 160-181. https://doi.org/10.1007/s42979-021-00592-x

Shao, G. (2020, January 17) What ‘deepfakes’ are and how they may be dangerous. CNBC. Available at: https://www.cnbc.com/2019/10/14/what-is-deepfake-and-how-it-might-be-dangerous.html. (Accessed: 18 October 2022).

Singh, A.R. (2017) How Can Artificial Intelligence Combat Fake News? GeoSpatial World. Available at: https://www.geospatialworld.net/blogs/can-artificial-intelligence-combat-fake-news/ (Accessed: 18 October 2022).

Snopes. (2022a) About Us: Snopes is the internet’s definitive fact-checking resource. Available at: https://www.snopes.com/about/ (Accessed: 22 June 2022).

Snopes. (2022b) Frequently Asked Questions. Available at: https://www.snopes.com/faqs/#faq-question-1627191156282 (Accessed: 18 October 2022).

Susarla, A. (2018, August 2) How artificial intelligence can detect – and create – fake news. Available at: https://msutoday.msu.edu/news/2018/how-artificial-intelligence-can-detect-and-create-fake-news (Accessed: 17 October 2022).

Tai, M.C. (2020) ‘The impact of artificial intelligence on human society and bioethics’, Tzu Chi Med J., 32(4), pp. 339-343. doi: 10.4103/tcmj.tcmj_71_20.

The Conversation. (2018, May 3) How Artificial Intelligence Can Detect, and Create, Fake News. Neuroscience News. Available at: https://neurosciencenews.com/ai-fake-news-8951/ (Accessed: 22 June 2022).

TinEye. (2022) How to use TinEye. Available at: https://tineye.com/how (Accessed: 21 October 2022).

Twitter (2020, May 20) Botometer. Available at: https://twitter.com/botometer/status/1263120334695301127?lang=mr (Accessed: 20 October 2022)

Vosoughi, S., Roy, D. and Aral, S. (2018, March) ‘The Spread of True and False News Online’, Science, 359(6380), pp. 1146–1151. https://doi.org/10.1126/science.aap9559

Watson, P. (2018, April 6) Artificial intelligence is making fake news worse. Available at: https://www.businessinsider.com/artificial-intelligence-is-making-fake-news-worse-2018-4 (Accessed: 22 June 2022).

Wilding, D., Fray, P., Molitorisz, S. and McKewon, E. (2018) The Impact of Digital Platforms on News and Journalistic Content. University of Technology Sydney, NSW. Available at: https://www.accc.gov.au/system/files/ACCC+commissioned+report+-+The+impact+of+digital+platforms+on+news+and+journalistic+content,+Centre+for+Media+Transition+(2).pdf (Accessed: 23 October 2022).

Zhang, Q., Lu, J., and Jin, Y. (2021) ‘Artificial intelligence in recommender systems’, Complex Intell. Syst. 7, pp. 439–457. https://doi.org/10.1007/s40747-020-00212-w

Zubiaga, A., Aker, A., Bontcheva, K., Liakata, M. and Procter, R. (2018) ‘Detection and resolution of rumours in social media: A survey’, ACM Comput. Surv., 51(2), pp. 32-36. https://doi.org/10.1145/3161603